The Friction Myth

On Seamlessness, Offloading, and the End of Epistemic Struggle in the Age of Chat

Ten days ago, a friend sent me Kyla Scanlon’s reflections on friction. (I recommend you read them, too.) It took me ten days to read the article, or, rather, to open it in earnest, and another thirty minutes to read it and take notes. Which is to say, there is nothing particularly significant about those ten days, other than that they are evidence of a point I will attempt to outline in this essay: life is full of edges (rough, sharp, blunt, bumpy, you name it) that impose friction, and friction is unavoidable (and good).

Despite the archetype I grew up seeing in media of the go-getter professional who wakes at 4 a.m., swims laps, reads the Wall Street Journal cover to cover by 6 a.m., and is at the office by 7 a.m., life is, in fact, never so simple or so productive. Life is, and always has been, full of friction. I know this because it takes me at least an hour after my hair gets wet to look presentable for work. And if I start getting dressed before I’m done blow-drying my hair, the humidity-induced sweating will force a full outfit change. Otherwise, I, too, would be my childhood idol, C.J. Cregg.

Scanlon’s premise is that friction has been automated out of our lives, or, at least, that we’ve effectively been sold that idea. But as I read, I kept circling a different problem: not friction’s removal, but its misrecognition. The article isn’t primarily about that, but it’s where my attention landed. I’m less interested in whether friction is gone (it isn’t) and more concerned with what happens when it’s misnamed (by the trillion-dollar PR machine that has been feeding us the notion that “seamlessness” is not only desirable but also right around the proverbial corner of emerging tech).

These are the distortions that I think happen when we internalize the story that friction has, in fact, been automated out of our lives:

First, the cognitive work of thinking (developing ideas, making judgments, holding contradictions, knowing why there is not an “and” after the serial comma that precedes this final noun phrase) is quietly replaced by the production of output. The tool completes the sentence, and we accept it as the completion of the thought.

Second, friction is rebranded, not as difficulty worth engaging, but as inefficiency to be optimized away. Over time, the distinction between difficulty and inefficiency blurs, and the mandate to eradicate both only strengthens.

And, finally, there is the illusion that the effort has vanished, when in fact it has only been aestheticized, outsourced, or internalized.

As an academic, I contend that the effects of these compounding distortions are increasingly visible in how we teach, how we write, and how we make sense of ourselves as thinkers.

As a technologist, I do not believe the solution is to pretend these tools do not exist. That pedagogical posture has never held. People use what is available to them.

Instead, what interests me is whether we can learn to tolerate the discomfort of actual thinking while also learning to use these tools in ways that do not foreclose the possibility of thought. If friction is not going away, and, as Scanlon notes, we are deep in an era of styling it to look like it has, then those of us technologists who still remember what it was like to wrestle, all by ourselves, to find and articulate our ideas need to strive to understand what it means to think in a world that is trying very hard to convince us that the thinking has already been done, by the LLM.

Of course, the fantasy of seamlessness isn’t new. Capitalism has long promised that friction can be optimized away, if not by technology, then by discipline, scheduling, and personal willpower. The reason C.J. Cregg tripping on her treadmill during her “me time” is funny[1] is that it is relatable. A Sorkin-esque visual metaphor for a deeper pattern we all know: friction will always find us, and we will always trip.

This is the myth we’re living inside, and it is twofold: (1) friction can be conquered through effort or interface, and (2) if it isn’t, we won’t trip. But it can’t, and we will.

The forms change, the mechanisms evolve, but someone always stumbles. And that is ok. So the real task isn’t to eliminate friction. It’s to remain cognitively intact despite all efforts to disguise it. To resist the seductive logic that tools that afford efficiency are sufficient for developing skills that demand difficulty.

So let’s look more closely. If friction hasn’t disappeared, where has it gone? And what are the consequences of not recognizing its new forms?

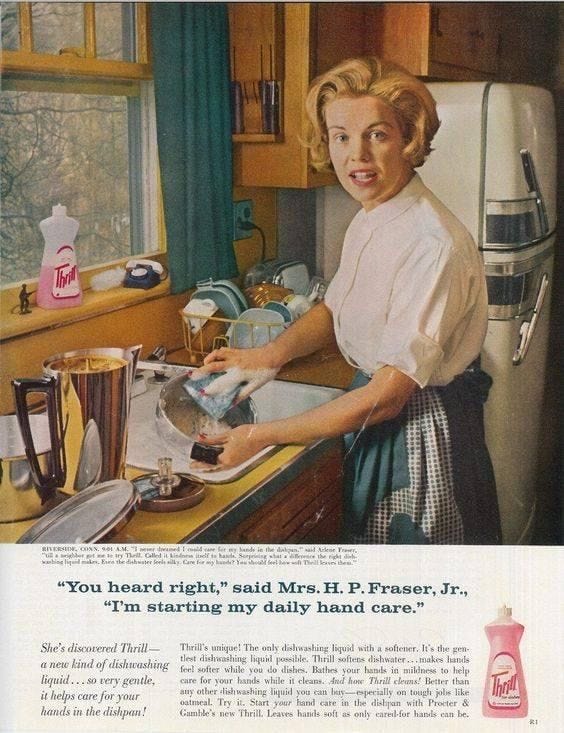

There’s a long history of technologies that promised to reduce friction but instead redistributed it. In the domestic sphere, the microwave and the dishwasher were marketed as time-saving devices, liberators from the tedium of housework. And in many ways, they were. But they also restructured what counted as effort. The time saved did not reduce expectations; it shifted them. What were once seen as optional tasks or conveniences became new baselines. Scholars in feminist science and technology studies have long mapped how such technologies reframe labor and autonomy, often deepening the burdens they promised to relieve.

I don’t intend to retrace their arguments here. Rather, I am interested in their echo in modern technologies like generative AI and LLMs (aka chatbots). They are not the first to displace friction while masquerading as its eradication. They are merely the latest.

Much like energy under the first law of thermodynamics, friction cannot be created or destroyed, only transformed from one form to another.

A few months ago, I was in the car with my uncle when we were rear-ended in traffic. Thankfully, no one was hurt. We pulled over, exchanged information, and then, after the shock had passed, called the insurance company on speakerphone from the car. What followed was not a conversation so much as a polite deferral. The representative thanked us for reporting the incident, explained that they’d log the claim, and then said, almost cheerily, “We’ll send a link to your phone. You can upload everything there: photos, paperwork, the works.”

In other words: your problem, your labor.

After we hung up, my uncle, eyes fixed on the road, said to me with a kind of paternal clarity, in the tone of someone passing down a piece of generational knowledge, “Don’t forget, once upon a time, companies paid people to do what they’re now asking you to do.”

I haven’t stopped thinking about that sentence, because over the course of my life (which spans roughly the same years as modern tech history) there’s been a quiet, steady redistribution of responsibility from institutions to individuals. Not just responsibility, but labor. And not just labor, but effort. Effort that gets framed as “convenience.”

And to accomplish this remaking of meaning, we’ve been trained to interpret these moments (e.g., submit your own documentation, find your own forms, troubleshoot your own issues) not as burdens, but as upgrades.

The friction is GONE!

It’s seamless, streamlined!!

You are in control!!

The insurance rep even said, “It’ll just be easier, we’ll text you the link.” But easier for whom? The work didn’t go away. It just became invisible. Unpaid. And, ours.

I’m not the first to notice this shift. But I do think it’s worth saying plainly: when a company invites you to “self-serve,” what they’re often doing is offloading paid labor onto you, cloaked in the language of autonomy. And now, increasingly, what is being displaced is the worst type of friction there is: thought. It is a pattern that has surfaced, perhaps most insidiously, in the rise of generative AI.

Part of the reason LLMs feel so helpful, so seamless, is that the friction they alleviate is real. Writing is hard. Coding is hard. Math is hard. But the kind of difficulty they displace, perhaps even more so than the technologies that came before, is what we might call ‘formative friction’: the kind of friction that doesn’t just slow you down, but forms what you know.

What I mean is: the work of learning is not to perform competence, but to develop it. Memorizing your times tables is useful only insofar as you understand what multiplication is. If you can’t tell me what six times eight means, your recall is trivia, not knowledge. And if you aren’t even interested in memorizing it for the party trick, then I don’t know what to say other than that your incompetence is not even hiding at all.

In this way, the displacement of friction LLMs afford is more than practical. It is epistemic. The technology doesn’t just ease the burden, it confuses us about what the burden was to begin with.

The challenge of writing, for instance, was never just about putting words on a page. It was, and is, about the internal work of locating the shape of a thought. Of wrestling with the distance between what you almost mean and what you actually mean. (Getting from almost to actually sometimes takes drafts, and drafts, and drafts, and edits, and edits, and edits, both your own and others’.) That work is effortful. That work takes time. That work is frictional by its very nature. And now, that friction is something LLMs encourage you to bypass.

You prompt the model, and it returns a sentence. Or five. I never imagined “be more succinct and cogent” would become such a mainstay in my daily conversations with a machine. And yet. The output is smooth. People say it writes at the graduate level, and I tend to agree. Chat’s outputs sound like something you might have written; in any case, they sound pretty damn close to what you almost mean, and no one actually knew what you intended to say (how would they? how would you? you were never quite able to articulate that, were you?). So in that moment, when something like what you mean has been said, and said better than you may have been able to say the thing you actually mean, you accept the sentence as the thought itself. As your thought.

This is the crux of the displacement: the substitution of output for process, product for progeny. In our century-long quest to collapse the distinction between difficulty and inefficiency, we’ve created the conditions to rebrand intellectual difficulty as inefficiency outright.

And now, we are walking, fuck it, sliding down a path of erosion. Not just of skill, but of the capacity to tolerate not knowing. To stay with the friction that begets our capacity to think.

There’s so much more I had hoped to say here: about the aesthetic performance of seamlessness, the quiet restructuring of how we relate to knowledge, the hidden labor that props up these systems, and the growing intolerance for discomfort that seems to shadow those growing up alongside the stratospheric rise of tools like ChatGPT. (Maybe I’ll turn this into a series.)

With that, all the best staying with the friction.

As always,

Caseysimone

[1] For what it’s worth, I don’t find C.J. Cregg falling particularly funny myself (I don’t like watching women get hurt, I don’t find it particularly smart as a comedic device, and the video’s title, “Run, crazy lady,” only underscores that discomfort … but that’s another essay).

This reminded me of my nascent crank theory (all right, not really; it's mostly stolen from Haskel & Westlake's 2018 book "Capitalism without Capital") that US GAAP vs IFRS accounting standards for software development costs are an underrated variable in all the nutty stuff coming out of the US tech industry from time to time. Basically, GAAP has historically made it easier and more likely for companies to capitalize "software development costs" (SDCs) as intangible assets, rather than expensing them in the period incurred, if and when the development meets a standard for "technological feasibility," defined starting on page 5 here: https://storage.fasb.org/fas86.pdf
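To make the capitalize-versus-expense distinction concrete, here is a minimal Python sketch with invented numbers: a hypothetical firm with 100 in revenue per year and a one-time development cost of 90, amortized straight-line over an assumed three-year useful life. The function names and figures are illustrative only; the sketch ignores taxes and every other line item and shows nothing but the timing of reported profit.

```python
# Hypothetical illustration: the same software development cost, expensed
# vs. capitalized. All figures are invented; taxes and every other line
# item are ignored.


def expensed_profits(revenue, dev_cost, years):
    """Reported profit per year if the whole cost is expensed in year 1."""
    return [revenue - (dev_cost if year == 0 else 0) for year in range(years)]


def capitalized_profits(revenue, dev_cost, years, useful_life):
    """Reported profit per year if the cost is capitalized as an intangible
    asset and amortized straight-line over `useful_life` years."""
    annual_amortization = dev_cost / useful_life
    return [
        revenue - (annual_amortization if year < useful_life else 0)
        for year in range(years)
    ]


if __name__ == "__main__":
    revenue, dev_cost, years, useful_life = 100, 90, 3, 3  # hypothetical inputs
    expensed = expensed_profits(revenue, dev_cost, years)
    capitalized = capitalized_profits(revenue, dev_cost, years, useful_life)
    for year, (e, c) in enumerate(zip(expensed, capitalized), start=1):
        print(f"Year {year}: expensed {e:6.1f} | capitalized {c:6.1f}")
    print(f"Total:  expensed {sum(expensed):6.1f} | capitalized {sum(capitalized):6.1f}")
```

Total profit over the three years comes out the same either way; what changes is when the cost shows up. Capitalizing moves most of the cost out of year 1, which is why it flatters the first year's reported numbers.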

The tech industry unsurprisingly lobbied for this accounting standard because it reduced firms' cost of capital; proponents argue that this is because it reduced information asymmetry, perhaps a kind of friction: https://journals.sagepub.com/doi/abs/10.1177/0148558x0802300208

Also unsurprisingly, tech stocks are notoriously "overvalued" in the US vs the EU, by traditional financial metrics: https://www.sciencedirect.com/science/article/abs/pii/S0261560605000318

Over-capitalizing expenses is also one of the most common types of accounting fraud because it allows companies to defer expense recognition through depreciation or amortization, which inflates short-term profits and offsets future taxable income. This obviously might come in handy if you were trying to raise money by making some bad software that doesn't do anything but kinda sorta "works." In the year of our lord 2025, I don't feel good about the odds that IRS and/or SEC auditors will have the tools to consistently enforce the GAAP standard for "technological feasibility" in SFAS No. 86:

"For purposes of this Statement, the technological feasibility of a computer software product is established when the enterprise has completed all planning, designing, coding, and testing activities that are necessary to establish that the product can be produced to meet its design specifications including functions, features, and technical performance requirements. At a minimum, the enterprise shall have performed the activities in either (a) or (b) below as evidence that technological feasibility has been established:

a. If the process of creating the computer software product includes a detail program design:

(1) The product design and the detail program design have been completed, and the enterprise has established that the necessary skills, hardware, and software technology are available to the enterprise to produce the product.

(2) The completeness of the detail program design and its consistency with the product design have been confirmed by documenting and tracing the detail program design to product specifications.

(3) The detail program design has been reviewed for high-risk development issues (for example, novel, unique, unproven functions and features or technological innovations), and any uncertainties related to identified high-risk development issues have been resolved through coding and testing.

b. If the process of creating the computer software product does not include a detail program design with the features identified in (a) above:

(1) A product design and a working model of the software product have been completed.

(2) The completeness of the working model and its consistency with the product design have been confirmed by testing."

It's easy to imagine LLMs creating even more opportunities to deceive auditors with fraudulent software capitalization, so I guess we just have to hope auditors can stay on the cutting edge of AI and whatnot.